1. Coding spaces for a class of interval maps

The starting point for this post is Franz Hofbauer’s paper “On intrinsic ergodicity of piecewise monotonic transformations with positive entropy”, which is actually two papers; both appeared in Israel J. Math, one in 1979 and one in 1981. He proves existence and uniqueness of the measure of maximal entropy (MME) for a map $T\colon[0,1]\to[0,1]$ provided the following are true:

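- Condition 1: there are disjoint intervals $I_a$, indexed by a finite alphabet $A$, such that $\bigcup_{a\in A}\overline{I_a} = [0,1]$ and $T|_{I_a}$ is continuous and strictly monotonic for each $a\in A$;

- Condition 2: the partition into these intervals is generating, that is, $\bigcup_{n\geq 0}T^{-n}(E)$ is dense in $[0,1]$, where $E$ denotes the set of all endpoints of the intervals $I_a$;

- Condition 3: $h_{\mathrm{top}}(T) > 0$;

- Condition 4: $T$ is topologically transitive.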

Hofbauer’s proof proceeds by modeling $T$ using a countable-state Markov shift, and actually gives quite a bit of information even without topological transitivity, including a decomposition of the non-wandering set into transitive pieces, each of which supports at most one MME.

I want to describe an approach to these maps using non-uniform specification. These maps admit a natural symbolic coding on the finite alphabet $A$ obtained by tracking which interval $I_a$ an orbit lands in at each iterate, and this is the lens through which we will study them.

To make this more precise, suppose that $T$ satisfies Condition 1 (piecewise monotonicity), and let $\mathcal L$ denote the set of all words $w = w_1\cdots w_n\in A^n$ such that the following interval is non-empty:

$$I(w) := I_{w_1}\cap T^{-1}I_{w_2}\cap\cdots\cap T^{-(n-1)}I_{w_n}. \tag{1}$$

The letter “I” can be understood as standing for “interval” or for “initial”, in the sense that an orbit segment $x, Tx,\dots,T^{n-1}x$ is coded by the word $w$ if and only if its initial point $x$ lies in $I(w)$. In this case we have

$$T^n x\in F(w) := T^n\big(I(w)\big), \tag{2}$$

where the “F” in this notation can be understood as standing for “following” (or “final” if you prefer). Observe that the intervals $I(w)$ with $|w| = n$ are precisely the intervals on which $T^n$ is continuous and monotonic, and thus each $F(w)$ is an interval as well.
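As a concrete illustration of (1) and (2), here is a minimal Python sketch for a β-transformation $T(x) = \beta x \bmod 1$ with $1<\beta<2$ (the family studied in Section 2). This map has increasing branches $T_a(x) = \beta x - a$ on $I_0 = [0,1/\beta)$ and $I_1 = [1/\beta,1)$. The value $\beta = 19/10$, the function names, and the convention of storing intervals as half-open pairs are our own assumptions for illustration. Exact rational arithmetic is used so that endpoints can be compared without rounding error.

```python
from fractions import Fraction

# Hypothetical example (our choice, not from the text): T(x) = BETA*x mod 1 on [0, 1)
# with 1 < BETA < 2. The alphabet is {0, 1}, and each branch T_a(x) = BETA*x - a is
# increasing on I_a. Intervals are half-open pairs (lo, hi) of exact rationals.
BETA = Fraction(19, 10)


def branch_interval(a):
    """I_a = [a/BETA, (a+1)/BETA) intersected with [0, 1)."""
    return (a / BETA, min((a + 1) / BETA, Fraction(1)))


def intersect(J, K):
    """Intersection of two half-open intervals, or None if it is empty."""
    lo, hi = max(J[0], K[0]), min(J[1], K[1])
    return (lo, hi) if lo < hi else None


def initial_interval(w):
    """I(w) from (1), built from the right via I(a u) = I_a ∩ T_a^{-1}(I(u))."""
    symbols = [int(c) for c in w]
    J = branch_interval(symbols[-1])
    for a in reversed(symbols[:-1]):
        pulled_back = ((J[0] + a) / BETA, (J[1] + a) / BETA)  # T_a^{-1}(J)
        J = intersect(branch_interval(a), pulled_back)
        if J is None:
            return None  # I(w) is empty, so w is not in L
    return J


def follower_interval(w):
    """F(w) = T^n(I(w)) from (2): apply the branches T_{w_1}, ..., T_{w_n} in turn."""
    J = initial_interval(w)
    if J is None:
        return None
    for a in (int(c) for c in w):
        J = (BETA * J[0] - a, BETA * J[1] - a)
    return J


print(initial_interval("10"), follower_interval("10"))  # I(10) = [10/19, 290/361), F(10) = [0, 1)
```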

Exercise 1 Suppose that $T$ is uniformly expanding on each $I_a$ in the sense that there is $\lambda > 1$ such that $|Tx - Ty|\geq\lambda|x-y|$ whenever $x,y\in I_a$, and prove that in this case Condition 2 (generating) is satisfied.

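Exercise 2 Suppose that $T$ satisfies Conditions 1 and 2. Show that $\mathcal L$ is the language of a shift space $X\subset A^{\mathbb N}$, and that there is a semi-conjugacy $\pi\colon X\to[0,1]$ defined by

$$\pi(x) = \bigcap_{n\geq 1}\overline{I(x_1\cdots x_n)}.$$

(The fact that this intersection is a single point relies on Condition 2.) Show that there is a countable set $D\subset[0,1]$ such that $\pi\colon X\setminus\pi^{-1}(D)\to[0,1]\setminus D$ is 1-1.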

Exercise 3 Show that if $T$ is uniformly expanding on each $I_a$, then Condition 3 (positive entropy) is satisfied, and in particular $X$ has positive topological entropy $h_{\mathrm{top}}(X) > 0$.

Under Conditions 1–3, there is a 1-1 correspondence between positive entropy invariant measures for $T$ and positive entropy invariant measures for $(X,\sigma)$, because the countable set $D$ can support only zero entropy measures. Thus to study the MMEs for $T$, it suffices to study the MMEs for the shift space $X$.

In general one cannot expect this shift space to be Markov. However, it turns out that in many cases it does have the non-uniform specification property described in my last post, which will lead to an alternate proof of intrinsic ergodicity (that is, existence of a unique MME). One of my motivations for writing out the details of this here is the hope that these techniques might eventually be applicable to more general hyperbolic systems with singularities, in particular to billiard systems; piecewise expanding interval maps provide a natural sandbox in which to experiment with the approach before grappling with the difficulties introduced by the presence of a stable direction.

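To this end, the goal for this post is to exhibit collections of words $\mathcal G,\mathcal C\subset\mathcal L$ with the following properties.

- Decomposition: For every $w\in\mathcal L$, there are $v\in\mathcal G$ and $u\in\mathcal C$ such that $w = vu$.

- Specification: There is $\tau\in\mathbb N$ such that for every $v,w\in\mathcal G$, there is $u\in\mathcal L$ with $|u|\leq\tau$ such that $vuw\in\mathcal G$.

- Entropy gap: $h(\mathcal C) < h(\mathcal L)$, where $h(\mathcal D) := \limsup_{n\to\infty}\frac1n\log\#\mathcal D_n$ and $\mathcal D_n$ denotes the set of words of length $n$ in $\mathcal D$.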

Once these are proved, the main theorem from the previous post guarantees existence of a unique MME. (The specification property there requires $|u| = \tau$, but this was merely to simplify the exposition; with a little more work one can establish the result under the weaker condition here.)

2. Exploring β-transformations

Rather than stating a definitive result immediately, let us go step by step. In particular, we will eventually add another condition on $T$ (beyond Conditions 1–3) to replace topological transitivity, and the initial definition of $\mathcal G$ and $\mathcal C$ will only cover certain examples, with a modification required to handle the general case.

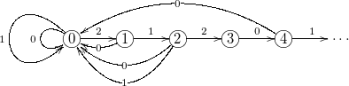

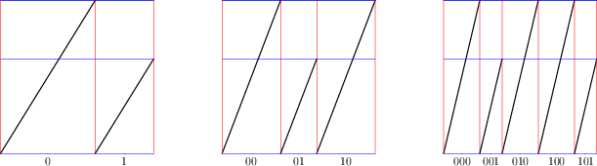

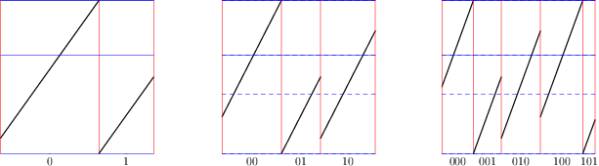

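Start by considering the β-transformation $T(x) = \beta x \pmod 1$ when $\beta = \frac{1+\sqrt5}{2}$ is the golden mean, whose first three iterates are shown in Figure 2. Labeling the branches with the alphabet $A = \{0,1\}$, we see that $I_0 = [0,\frac1\beta)$ and $I_1 = [\frac1\beta,1)$; in the figure, these intervals are marked on both the horizontal and vertical axes. Clearly $F(0) = [0,1)$, and for this specific choice of $\beta$ we have $\beta - 1 = \frac1\beta$, so $F(1) = [0,\beta-1) = I_0$.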

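Looking at the second picture in the figure, which shows the graph of $T^2$, we can see that

$$F(00) = F(10) = [0,1) = F(0), \qquad F(01) = I_0 = F(1).$$

The third picture shows a similar phenomenon for $T^3$, with $F(w) = [0,1)$ when $w\in\{000,010,100\}$, and $F(w) = I_0$ when $w\in\{001,101\}$.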

Exercise 4 For this particular map $T$, show by induction that $F(w) = [0,1)$ if $w$ ends in a $0$, and $F(w) = I_0$ if $w$ ends in a $1$. In particular, we have $F(w) = F(w_{|w|})$ for all $w\in\mathcal L$. Use this to deduce that $\mathcal L$ consists of precisely those words $w\in\{0,1\}^*$ that do not contain $11$ as a subword. (Thus $X$ is a transitive SFT and has a unique MME.)

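The key step in this exercise is the observation that given $a\in A$ and $w\in\mathcal L$ such that $aw\in\mathcal L$, the definition of the intervals $I(\cdot)$ and $F(\cdot)$ in (1) and (2) gives $I(aw) = I_a\cap T^{-1}I(w)$, and thus

$$F(aw) = T^{|w|+1}\big(I_a\cap T^{-1}I(w)\big) = T^{|w|}\big(T(I_a)\cap I(w)\big) = T^{|w|}\big(F(a)\cap I(w)\big).$$

In particular, if $F(a)\supset I(w)$, then we have $F(a)\cap I(w) = I(w)$, and thus

$$F(aw) = T^{|w|}\big(I(w)\big) = F(w).$$

A similar argument replacing $a$ with a word $v\in\mathcal L$ (and the first iterate $T$ with $T^{|v|}$) gives the following lemma, whose detailed proof is left as an exercise.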

Lemma 1 Given any map $T$ satisfying Conditions 1 and 2, and any $v,w\in\mathcal L$, we have

$$F(vw) = T^{|w|}\big(F(v)\cap I(w)\big). \tag{3}$$
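Taking $w$ to be a single symbol in Lemma 1 gives $F(va) = T(F(v)\cap I_a)$, so $F(w)$ and membership in $\mathcal L$ can be computed one symbol at a time. The sketch below does this for the golden-mean map of Exercise 4 and can be used to check its conclusion numerically for short words. The floating-point tolerance `EPS` and the function names are our own choices. A tolerance is needed because $\beta - 1 = 1/\beta$ only holds up to rounding in floating point.

```python
import math

BETA = (1 + math.sqrt(5)) / 2  # the golden mean of Exercise 4
EPS = 1e-9  # intervals shorter than this are treated as empty (floating-point slack)


def step(J, a):
    """Lemma 1 with w = a: F(va) = T(F(v) ∩ I_a). Returns None if va is not in L."""
    lo = max(J[0], a / BETA)
    hi = min(J[1], (a + 1) / BETA, 1.0)
    if hi - lo <= EPS:
        return None
    return (BETA * lo - a, BETA * hi - a)


def language(n):
    """Dictionary {w: F(w)} over all words w of length n in L."""
    words = {"": (0.0, 1.0)}  # F of the empty word is [0, 1)
    for _ in range(n):
        extended = {}
        for w, J in words.items():
            for a in (0, 1):
                J_next = step(J, a)
                if J_next is not None:
                    extended[w + str(a)] = J_next
        words = extended
    return words


for n in range(1, 7):
    print(n, len(language(n)))  # 2, 3, 5, 8, 13, 21 words: Fibonacci numbers
```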

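Now consider the map $T(x) = \beta x \pmod 1$ for a value $\beta\in(\frac{1+\sqrt5}{2},2)$; Figure 2 shows the first three iterates. Observe that we once again have $F(0) = [0,1)$ and $F(1) = [0,\beta-1)\supset I_0$, so that the same argument as in Exercise 4 shows that if $w\in\{0,1\}^*$ is any word that does not contain $11$ as a subword, then $w\in\mathcal L$. However, this is no longer a complete description of the language, since as the second picture in Figure 2 illustrates, we have $I(11)\neq\emptyset$ (equivalently, $F(1)\cap I_1 = [\frac1\beta,\beta-1)\neq\emptyset$, as seen in the first picture); consequently $11\in\mathcal L$.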

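In Exercise 4, every word $w = w_1\cdots w_n$ had the property that $F(w) = F(w_n)$. Writing $v$ for $w_1\cdots w_{n-1}$ and $a$ for $w_n$, we can rewrite this property as $F(va) = F(a)$. By Lemma 1 (and injectivity of $T$ on $I_a$), this occurs if and only if $F(v)\supset I_a$. These two properties can be seen on Figure 2 as follows.

- $F(va) = F(a)$: the vertical interval spanned by the graph of $T^n$ on $I(va)$ is the same as the vertical interval spanned by the graph of $T$ on $I_a$.

- $F(v)\supset I_a$: on the horizontal interval $I(v)$, the graph of $T^{n-1}$ crosses the vertical interval $I_a$ completely.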

Looking at Figure 2, we see that now these properties are satisfied for some words, but not for all. Let us write

$$\mathcal G := \{w\in\mathcal L : F(w) = F(w_{|w|})\}. \tag{4}$$

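Exercise 5 Referring to Figure 2, prove that $F(w) = F(w_2)$ for $w\in\{00,01,10\}$ and that $F(11)\neq F(1)$. In particular, $00$, $01$, and $10$ lie in $\mathcal G$, while $11$ (and indeed every word of $\mathcal L$ ending in $11$) lies in $\mathcal L$ but not in $\mathcal G$. Show that on the other hand, some words containing $11$ do lie in $\mathcal G$ (at least for the value of $\beta$ illustrated), so that words in $\mathcal G$ can have $11$ as a subword.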
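Exercise 5 can also be explored numerically. This sketch tests membership in $\mathcal G$ from (4) using the one-symbol recursion from Lemma 1, with exact arithmetic. We do not know the exact $\beta$ used in Figure 2, so we assume $\beta = 19/10$ as a hypothetical stand-in. The names `follower` and `in_G` are ours.

```python
from fractions import Fraction

BETA = Fraction(19, 10)  # hypothetical stand-in for the value of β in Figure 2


def follower(w):
    """F(w) via F(va) = T(F(v) ∩ I_a) (Lemma 1), exactly; None if w is not in L."""
    lo, hi = Fraction(0), Fraction(1)  # F of the empty word is [0, 1)
    for a in (int(c) for c in w):
        lo, hi = max(lo, a / BETA), min(hi, (a + 1) / BETA, Fraction(1))
        if lo >= hi:
            return None
        lo, hi = BETA * lo - a, BETA * hi - a
    return (lo, hi)


def in_G(w):
    """Membership in G from (4): w is in L and F(w) = F(w_{|w|})."""
    fw = follower(w)
    return fw is not None and fw == follower(w[-1])


print([w for w in ("00", "01", "10", "11", "110") if in_G(w)])  # ['00', '01', '10', '110']
```

For this β, $11\in\mathcal L\setminus\mathcal G$ while $110\in\mathcal G$, in line with the pattern described in Exercise 5.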

The notation $\mathcal G$ suggests that we are going to try to prove the specification property for this collection of words. The first step in this direction is to prove the following Markov-type property.

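Lemma 2 Let $T$ satisfy Conditions 1 and 2, and let $\mathcal G$ be given by (4). If $v,w\in\mathcal G$ have the property that $v_{|v|} = w_1$, then $v\cdot w\in\mathcal G$, where $v\cdot w := v_1\cdots v_{|v|}w_2\cdots w_{|w|}$ (concatenation with the repeated symbol only appearing once).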

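Proof: Write $w = au$ where $a = w_1 = v_{|v|}$ and $u = w_2\cdots w_{|w|}$, so that $v\cdot w = vu$. By Lemma 1, we have

$$F(v\cdot w) = F(vu) = T^{|u|}\big(F(v)\cap I(u)\big).$$

Since $v\in\mathcal G$ and $v_{|v|} = a$, we have $F(v) = F(a)$, so $F(v)\cap I(u) = F(a)\cap I(u)$ (which is non-empty because $au = w\in\mathcal L$), and we conclude that

$$F(v\cdot w) = T^{|u|}\big(F(a)\cap I(u)\big) = F(au) = F(w) = F(w_{|w|}),$$

where the last equality uses the fact that $w\in\mathcal G$. This shows that $v\cdot w\in\mathcal G$.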

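In the specific case of the β-transformation with $\beta\in(\frac{1+\sqrt5}{2},2)$ shown in Figure 2, we can refer to Figure 2 and Exercise 5 to see that $\mathcal G$ contains the words $00$, $01$, $10$, and $101$ (the last because $101 = 10\cdot 01$, using Lemma 2). In particular, for any $v,w\in\mathcal G$, we can choose $u$ to be the one of these four words that begins with the last symbol in $v$, and ends with the first symbol in $w$. Then $v_{|v|} = u_1$ and $u_{|u|} = w_1$. Applying Lemma 2 twice gives $v\cdot u\cdot w = v\,u_2\cdots u_{|u|-1}\,w\in\mathcal G$, which proves the specification property (with $\tau = 1$).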
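The specification argument above can be checked mechanically. This sketch again assumes the hypothetical value $\beta = 19/10$. Here `dot` implements the concatenation $v\cdot w$ from Lemma 2, and `glue` inserts a connecting word chosen from $00$, $01$, $10$, $101$ (our choice of connecting words; any words in $\mathcal G$ with the right first and last symbols would do). One can then confirm by brute force that $v\cdot u\cdot w\in\mathcal G$ for all short $v,w\in\mathcal G$.

```python
from fractions import Fraction

BETA = Fraction(19, 10)  # hypothetical stand-in for the value of β in Figure 2

# Connecting words (our choice): u begins with the last symbol of v and ends with the
# first symbol of w. 00, 01, 10 are in G, and 101 = 10·01 is in G by Lemma 2.
CONNECTOR = {("0", "0"): "00", ("0", "1"): "01", ("1", "0"): "10", ("1", "1"): "101"}


def follower(w):
    """F(w) via F(va) = T(F(v) ∩ I_a) (Lemma 1), exactly; None if w is not in L."""
    lo, hi = Fraction(0), Fraction(1)
    for a in (int(c) for c in w):
        lo, hi = max(lo, a / BETA), min(hi, (a + 1) / BETA, Fraction(1))
        if lo >= hi:
            return None
        lo, hi = BETA * lo - a, BETA * hi - a
    return (lo, hi)


def in_G(w):
    """Membership in G from (4)."""
    fw = follower(w)
    return fw is not None and fw == follower(w[-1])


def dot(v, w):
    """v·w: concatenation in which the shared symbol v_{|v|} = w_1 appears once."""
    if v[-1] != w[0]:
        raise ValueError("last symbol of v must equal first symbol of w")
    return v + w[1:]


def glue(v, w):
    """v·u·w with u taken from CONNECTOR; two applications of Lemma 2 put it in G."""
    u = CONNECTOR[(v[-1], w[0])]
    return dot(dot(v, u), w)


print(glue("01", "10"))  # prints 01010
```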

3. Obtaining a specification property

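In the general case of an interval map satisfying Conditions 1 and 2, we would like to play the same game, proving specification for $\mathcal G$ by mimicking the proof of specification for a topologically transitive SFT. Given $a,b\in A$, we would like to let $u = u(a,b)\in\mathcal G$ be such that $u_1 = a$ and $u_{|u|} = b$. Then, given any $v,w\in\mathcal G$, we can put $u = u(v_{|v|},w_1)$ and apply Lemma 2 twice to get $v\cdot u\cdot w\in\mathcal G$. Since $A$ is finite, this would prove that $\mathcal G$ has specification with $\tau = \max_{a,b\in A}|u(a,b)|$.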

But how can we guarantee that such a $u(a,b)$ exists for every $a,b\in A$? This does not follow from Conditions 1–3. Indeed, one can easily produce examples satisfying these conditions for which there are multiple MMEs and the collection $\mathcal G$ defined in (4) does not have specification, because there are some pairs $a,b\in A$ for which no such $u$ exists.

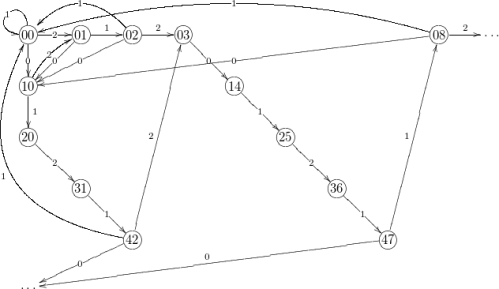

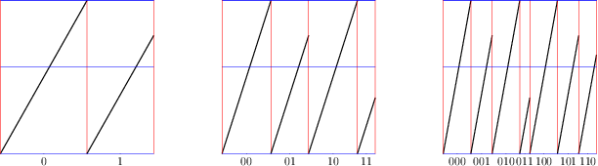

At this point it is tempting to simply add existence of the required words as an extra condition, replacing topological transitivity. But it is worth working a little harder. Consider the map $T$ whose first three iterates are illustrated in Figure 3.

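For this $T$, we see from the first picture that there is a symbol $b\in A$ such that no interval $F(a)$, $a\in A$, contains $I_b$. Thus there can be no word $w\in\mathcal G$ with $|w|\geq 2$ such that $w_{|w|} = b$, since we always have $F(w_1\cdots w_{|w|-1})\subset F(w_{|w|-1})$. It follows that $\mathcal G$ does not contain any words of length at least two ending in $b$, which rules out a proof of specification along the lines above.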

The story is different if instead of single letters, we look at words of length two. Observe from the second picture that

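This suggests that we should modify the definition of $\mathcal G$ in (4) to require that the follower set depends only on the last two symbols (instead of the last one). More generally, we can fix $k\in\mathbb N$ and define

$$\mathcal G^{(k)} := \{w\in\mathcal L : |w|\geq k \text{ and } F(w) = F(w_{|w|-k+1}\cdots w_{|w|})\}. \tag{6}$$

Note that $\mathcal G^{(1)} = \mathcal G$. We have the following generalization of Lemma 2.

Lemma 3 Let $T$ satisfy Conditions 1 and 2, let $k\in\mathbb N$, and let $\mathcal G^{(k)}$ be given by (6). If $v,w\in\mathcal G^{(k)}$ have the property that the last $k$ symbols of $v$ coincide with the first $k$ symbols of $w$, then $v\cdot w := v\,w_{k+1}\cdots w_{|w|}\in\mathcal G^{(k)}$.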

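Proof: Write $q := w_1\cdots w_k$ and $u := w_{k+1}\cdots w_{|w|}$, so that $w = qu$ and $v\cdot w = vu$. Using Lemma 1, we have

$$F(v\cdot w) = T^{|u|}\big(F(v)\cap I(u)\big),$$

and since $v\in\mathcal G^{(k)}$ ends in $q$, we have $F(v) = F(q)$, so $F(v)\cap I(u) = F(q)\cap I(u)$, giving

$$F(v\cdot w) = T^{|u|}\big(F(q)\cap I(u)\big) = F(qu) = F(w) = F(w_{|w|-k+1}\cdots w_{|w|}),$$

since $w\in\mathcal G^{(k)}$. This shows that $v\cdot w\in\mathcal G^{(k)}$.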

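Now we can prove that $\mathcal G^{(2)}$ has specification for the map $T$ in Figure 3. As described above, it suffices to prove that if $q,q'$ are any two words from the set $\mathcal L_2$ of words of length two, then there is some $u\in\mathcal G^{(2)}$ that begins with $q$ and ends with $q'$. This follows quickly from two facts. First, the transitions $p\to p'$ (meaning $pp'\in\mathcal G^{(2)}$) allowed by (5) are enough to get from any word of length two to any other. Second, any word obtained by concatenating allowable transitions must lie in $\mathcal G^{(2)}$ by iterating Lemma 3.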

This discussion motivates the following.

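Definition 4 Given $k\in\mathbb N$, define a directed graph whose vertices are the words in $\mathcal L_k$ and whose edges are given by the condition that $v\to w$ exactly when $vw\in\mathcal G^{(k)}$ (by Lemma 1, this happens exactly when $F(v)\supset I(w)$). Say that $T$ has Property $\mathbf P_k$ if this graph is strongly connected, meaning that given any two $v,w\in\mathcal L_k$, there is a path in the graph from $v$ to $w$.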
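Property $\mathbf P_k$ is a finite check once $F$ can be computed. Below is a minimal sketch, again assuming the hypothetical example $T(x) = \tfrac{19}{10}x \bmod 1$. It builds the graph of Definition 4 and tests strong connectivity by running a breadth-first search from every vertex. All names are ours.

```python
from collections import deque
from fractions import Fraction

BETA = Fraction(19, 10)  # hypothetical example map T(x) = BETA*x mod 1


def follower(w):
    """F(w) via F(va) = T(F(v) ∩ I_a) (Lemma 1), exactly; None if w is not in L."""
    lo, hi = Fraction(0), Fraction(1)
    for a in (int(c) for c in w):
        lo, hi = max(lo, a / BETA), min(hi, (a + 1) / BETA, Fraction(1))
        if lo >= hi:
            return None
        lo, hi = BETA * lo - a, BETA * hi - a
    return (lo, hi)


def in_Gk(w, k):
    """Membership in G^(k) from (6): |w| >= k, w in L, and F(w) = F(last k symbols)."""
    if len(w) < k:
        return False
    fw = follower(w)
    return fw is not None and fw == follower(w[-k:])


def graph(k):
    """Definition 4: vertices are the words of length k in L; v -> w when vw is in G^(k)."""
    vertices = [s for s in (format(i, f"0{k}b") for i in range(2 ** k)) if follower(s) is not None]
    edges = {v: [w for w in vertices if in_Gk(v + w, k)] for v in vertices}
    return vertices, edges


def reachable(start, edges):
    """Vertices reachable from start (including start itself), by breadth-first search."""
    seen, queue = {start}, deque([start])
    while queue:
        v = queue.popleft()
        for w in edges[v]:
            if w not in seen:
                seen.add(w)
                queue.append(w)
    return seen


def has_property_P(k):
    """Property P_k: the graph of Definition 4 is strongly connected."""
    vertices, edges = graph(k)
    return all(reachable(v, edges) == set(vertices) for v in vertices)


print(graph(1)[1], has_property_P(1), has_property_P(2))  # {'0': ['0', '1'], '1': ['0']} True True
```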

Property $\mathbf P_k$ is playing the role of topological transitivity, but the exact relationship is not quite clear yet. I’ll have more to say about this in my next post, and the formulation here is almost certainly not the “optimal” one. Since this is a blog and not a paper, though, let’s stick with this property for the time being so that we can formulate an actual result and not get lost in the weeds.

Proposition 5 Let $T$ satisfy Conditions 1 and 2, and Property $\mathbf P_k$ for some $k\in\mathbb N$. Then $\mathcal G^{(k)}$ has specification.

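Proof: By Property $\mathbf P_k$, for every $q,q'\in\mathcal L_k$, there are $q^0 = q, q^1,\dots,q^m = q'$ in $\mathcal L_k$ with $m\geq 1$ such that $q^{i-1}\to q^i$ for each $1\leq i\leq m$ (when $q = q'$, follow a path from $q$ to another vertex and back). Thus $q^{i-1}q^i\in\mathcal G^{(k)}$ for each $i$. Applying Lemma 3 repeatedly shows that $q^0q^1\cdots q^m\in\mathcal G^{(k)}$. Write $u(q,q') := q^0q^1\cdots q^m$, so $u(q,q')\in\mathcal G^{(k)}$ begins with $q$, ends with $q'$, and has length at least $2k$.

Now let $\tau := \max\{|u(q,q')| - 2k : q,q'\in\mathcal L_k\}$. Given any $v,w\in\mathcal G^{(k)}$, put $q := v_{|v|-k+1}\cdots v_{|v|}$ and $q' := w_1\cdots w_k$, and let $u := u(q,q')$, so that $u$ begins with the last $k$ symbols of $v$ and ends with the first $k$ symbols of $w$. One application of Lemma 3 gives $v\cdot u\in\mathcal G^{(k)}$, and a second application gives $v\cdot u\cdot w\in\mathcal G^{(k)}$. Since $v\cdot u\cdot w = v\,u_{k+1}\cdots u_{|u|-k}\,w$ and $|u| - 2k\leq\tau$, this completes the proof.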
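The proof of Proposition 5 is constructive: the connecting word $u(q,q')$ is read off from a path in the graph of Definition 4. This sketch uses the same hypothetical map with $\beta = 19/10$, and all names are ours. For each $q$, it finds shortest paths of length at least one by a breadth-first search started from the successors of $q$, and concatenates the blocks along each path. It then glues $v\cdot u\cdot w$ by writing the overlaps of length $k$ only once.

```python
from collections import deque
from fractions import Fraction

BETA = Fraction(19, 10)  # hypothetical example map T(x) = BETA*x mod 1


def follower(w):
    """F(w) via F(va) = T(F(v) ∩ I_a) (Lemma 1), exactly; None if w is not in L."""
    lo, hi = Fraction(0), Fraction(1)
    for a in (int(c) for c in w):
        lo, hi = max(lo, a / BETA), min(hi, (a + 1) / BETA, Fraction(1))
        if lo >= hi:
            return None
        lo, hi = BETA * lo - a, BETA * hi - a
    return (lo, hi)


def in_Gk(w, k):
    """Membership in G^(k) from (6)."""
    if len(w) < k:
        return False
    fw = follower(w)
    return fw is not None and fw == follower(w[-k:])


def connecting_words(k):
    """Map (q, q') to u(q, q') = q q^1 ... q^m (m >= 1) along a shortest path in the
    graph of Definition 4; by Lemma 3 each such word lies in G^(k)."""
    vertices = [s for s in (format(i, f"0{k}b") for i in range(2 ** k)) if follower(s) is not None]
    edges = {v: [w for w in vertices if in_Gk(v + w, k)] for v in vertices}
    u = {}
    for q in vertices:
        paths = {w: q + w for w in edges[q]}  # start from the successors of q, so m >= 1
        queue = deque(paths)
        while queue:
            v = queue.popleft()
            for w in edges[v]:
                if w not in paths:
                    paths[w] = paths[v] + w
                    queue.append(w)
        for target, word in paths.items():
            u[(q, target)] = word
    return u


def glue(v, w, u, k):
    """v·u(q, q')·w with q = last k symbols of v and q' = first k symbols of w."""
    c = u[(v[-k:], w[:k])]  # raises KeyError if no path exists (Property P_k fails)
    return v + c[k:-k] + w


u2 = connecting_words(2)
print(max(len(c) for c in u2.values()) - 2 * 2)  # tau for k = 2: longest inserted gap
```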

4. Bounding the entropy of suffixes

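Let $T$ be an interval map satisfying Conditions 1–3 and Property $\mathbf P_k$. Proposition 5 shows that $\mathcal G^{(k)}$ has specification, so it remains to find $\mathcal C$ such that the decomposition and entropy gap conditions are satisfied (with $\mathcal G^{(k)}$ in the role of $\mathcal G$).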

For simplicity, let us first discuss the case $k = 1$, where $\mathcal G^{(1)} = \mathcal G$. Given $w = w_1\cdots w_n\in\mathcal L$, we want to write $w = vu$ where $v\in\mathcal G$ and $u$ lies in some yet-to-be-determined collection $\mathcal C$. Since this collection should be “small”, it makes sense to ask for $v$ to be as long as possible. Thus it is reasonable to take $v = w_1\cdots w_m$, where $m$ is the largest index in $\{1,\dots,n\}$ with the property that

$$w_1\cdots w_m\in\mathcal G. \tag{7}$$

(Such an index exists because every single symbol lies in $\mathcal G$.)

In particular, for every $m < \ell\leq n$, we have

$$w_1\cdots w_\ell\notin\mathcal G. \tag{8}$$

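By Lemma 2, this implies that $w_m w_{m+1}\cdots w_\ell\notin\mathcal G$ for every $m<\ell\leq n$. Indeed, if we had $w_m\cdots w_\ell\in\mathcal G$, then using (7) and Lemma 2 would give $w_1\cdots w_\ell = (w_1\cdots w_m)\cdot(w_m\cdots w_\ell)\in\mathcal G$, contradicting (8).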

This shows that if we put $a := w_m$ and $u := w_{m+1}\cdots w_n$, then $u$ lies in the collection

$$\mathcal C(a) := \{v\in\mathcal L : av\in\mathcal L,\ av_1\cdots v_\ell\notin\mathcal G\ \ \forall\, 1\leq\ell\leq|v|\}. \tag{9}$$
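The choice of $m$ in (7) and the resulting suffix in (9) are easy to compute. Below is a sketch for the same hypothetical map ($\beta = 19/10$; names ours). Here `decompose` splits $w = vu$ with $v$ the longest prefix in $\mathcal G$, and `in_C` tests membership in $\mathcal C(a)$ directly from (9).

```python
from fractions import Fraction

BETA = Fraction(19, 10)  # hypothetical example map T(x) = BETA*x mod 1


def follower(w):
    """F(w) via F(va) = T(F(v) ∩ I_a) (Lemma 1), exactly; None if w is not in L."""
    lo, hi = Fraction(0), Fraction(1)
    for a in (int(c) for c in w):
        lo, hi = max(lo, a / BETA), min(hi, (a + 1) / BETA, Fraction(1))
        if lo >= hi:
            return None
        lo, hi = BETA * lo - a, BETA * hi - a
    return (lo, hi)


def in_G(w):
    """Membership in G from (4)."""
    fw = follower(w)
    return fw is not None and fw == follower(w[-1])


def decompose(w):
    """Split w = v u with v = w_1...w_m in G and m as large as possible, as in (7)."""
    m = max(j for j in range(1, len(w) + 1) if in_G(w[:j]))  # m >= 1: single symbols lie in G
    return w[:m], w[m:]


def in_C(a, u):
    """Membership of u in C(a) from (9)."""
    return follower(a + u) is not None and not any(
        in_G(a + u[:j]) for j in range(1, len(u) + 1)
    )


print(decompose("0111"))  # ('01', '11')
```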

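Taking $\mathcal C := \bigcup_{a\in A}\mathcal C(a)$ gives the desired decomposition $w = vu$. But what is the entropy of $\mathcal C$? First note that if $vb\in\mathcal C(a)$ for some $v\in\mathcal L$ and $b\in A$, then $v\in\mathcal C(a)$. So we can bound $\#\mathcal C_{n+1}(a)$ in terms of $\#\mathcal C_n(a)$ by controlling how many choices of $b$ there are for each $v\in\mathcal C_n(a)$.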

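The key is in the observation that $F(av)$ and $I_b$ are intervals that intersect in more than one point (since $avb\in\mathcal L$), but that $F(av)\not\supset I_b$ (since $avb\notin\mathcal G$). The only way for this to happen is if the interior of $I_b$ contains one of the endpoints of $F(av)$. Since the interiors of the intervals $I_b$, $b\in A$, are disjoint, we see that for any $v\in\mathcal C_n(a)$ there are at most two choices of $b$ for which $vb\in\mathcal C(a)$. It follows that

$$\#\mathcal C_{n+1}(a)\leq 2\,\#\mathcal C_n(a),$$

and iterating this gives

$$\#\mathcal C_n(a)\leq 2^n.$$

We conclude that

$$h(\mathcal C) = \limsup_{n\to\infty}\frac1n\log\#\mathcal C_n\leq\limsup_{n\to\infty}\frac1n\log\big(\#A\cdot 2^n\big) = \log 2.$$

If $h(\mathcal L) > \log 2$, then this provides the needed entropy gap, and we are done.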
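The counting argument can be compared with brute-force counts. Because $\mathcal C(a)$ is closed under taking prefixes, the sketch below (same hypothetical map, names ours) builds $\mathcal C_n(a)$ level by level and records $\#\mathcal C_n(a)$. By the argument above, this count should never exceed $2^n$.

```python
from fractions import Fraction

BETA = Fraction(19, 10)  # hypothetical example map T(x) = BETA*x mod 1


def follower(w):
    """F(w) via F(va) = T(F(v) ∩ I_a) (Lemma 1), exactly; None if w is not in L."""
    lo, hi = Fraction(0), Fraction(1)
    for a in (int(c) for c in w):
        lo, hi = max(lo, a / BETA), min(hi, (a + 1) / BETA, Fraction(1))
        if lo >= hi:
            return None
        lo, hi = BETA * lo - a, BETA * hi - a
    return (lo, hi)


def in_G(w):
    """Membership in G from (4)."""
    fw = follower(w)
    return fw is not None and fw == follower(w[-1])


def count_C(a, n_max):
    """[#C_0(a), ..., #C_{n_max}(a)] for C(a) from (9).

    C(a) is closed under taking prefixes, so level n+1 consists of the extensions vb
    of words v at level n such that a v b is in L but not in G."""
    level, counts = [""], [1]
    for _ in range(n_max):
        level = [
            v + b
            for v in level
            for b in "01"
            if follower(a + v + b) is not None and not in_G(a + v + b)
        ]
        counts.append(len(level))
    return counts


print(count_C("0", 6), count_C("1", 6))
```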

But what if $h(\mathcal L)$ lies in $(0,\log 2]$? This is where we need to use $\mathcal G^{(k)}$ instead of $\mathcal G$. Given any $k\in\mathbb N$ and $q\in\mathcal L_k$, let

$$\mathcal C^{(k)}(q) := \{v\in\mathcal L : qv\in\mathcal L,\ qv_1\cdots v_\ell\notin\mathcal G^{(k)}\ \ \forall\, 1\leq\ell\leq|v|\}. \tag{10}$$

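Following the same idea as in the case $k = 1$, we see that every word in $\mathcal C^{(k)}_{n+k}(q)$ is of the form $vu$, where $v\in\mathcal C^{(k)}_n(q)$ and $u\in\mathcal L_k$ are such that the intervals $F(qv)$ and $I(u)$ intersect in more than one point (since $qvu\in\mathcal L$) but $F(qv)\not\supset I(u)$ (since $qvu\notin\mathcal G^{(k)}$). The only way for this to happen is if the interior of $I(u)$ contains one of the endpoints of $F(qv)$. Since the interiors of the intervals $I(u)$ are disjoint (as $u$ ranges over $\mathcal L_k$), we see that for any $v\in\mathcal C^{(k)}_n(q)$ there are at most two choices of $u$ for which $vu\in\mathcal C^{(k)}(q)$. It follows that

$$\#\mathcal C^{(k)}_{n+k}(q)\leq 2\,\#\mathcal C^{(k)}_n(q),$$

and iterating this gives $\#\mathcal C^{(k)}_{jk+r}(q)\leq 2^j\,\#\mathcal L_r$ for all $j\geq 0$ and $0\leq r<k$. Since $\mathcal L_k$ is finite, the collection $\mathcal C^{(k)} := \bigcup_{q\in\mathcal L_k}\mathcal C^{(k)}(q)$ therefore satisfies

$$h(\mathcal C^{(k)})\leq\frac{\log 2}{k}. \tag{11}$$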
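The same brute-force count works for $\mathcal C^{(k)}(q)$ from (10). This sketch (hypothetical map $T(x) = \tfrac{19}{10}x \bmod 1$, names ours) records $\#\mathcal C^{(k)}_n(q)$. By the argument above, $\#\mathcal C^{(k)}_{jk}(q)\leq 2^j$, which is what drives the bound (11).

```python
from fractions import Fraction

BETA = Fraction(19, 10)  # hypothetical example map T(x) = BETA*x mod 1


def follower(w):
    """F(w) via F(va) = T(F(v) ∩ I_a) (Lemma 1), exactly; None if w is not in L."""
    lo, hi = Fraction(0), Fraction(1)
    for a in (int(c) for c in w):
        lo, hi = max(lo, a / BETA), min(hi, (a + 1) / BETA, Fraction(1))
        if lo >= hi:
            return None
        lo, hi = BETA * lo - a, BETA * hi - a
    return (lo, hi)


def in_Gk(w, k):
    """Membership in G^(k) from (6)."""
    if len(w) < k:
        return False
    fw = follower(w)
    return fw is not None and fw == follower(w[-k:])


def count_Ck(q, k, n_max):
    """[#C^(k)_0(q), ..., #C^(k)_{n_max}(q)] for C^(k)(q) from (10), one symbol at a time."""
    level, counts = [""], [1]
    for _ in range(n_max):
        level = [
            v + b
            for v in level
            for b in "01"
            if follower(q + v + b) is not None and not in_Gk(q + v + b, k)
        ]
        counts.append(len(level))
    return counts


for k in (1, 2, 3):
    qs = [format(i, f"0{k}b") for i in range(2 ** k)]
    print(k, {q: count_Ck(q, k, 4 * k) for q in qs if follower(q) is not None})
```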

Together with Proposition 5, this proves the following.

Theorem 6 Let $T$ be an interval map satisfying Conditions 1–3 and Property $\mathbf P_k$ for some $k > \frac{\log 2}{h(\mathcal L)}$. Then the coding space $X$ for $T$ has a language with a decomposition satisfying the specification and entropy gap conditions; in particular, $T$ has a unique MME.

Some natural questions present themselves at this point.

- How does Property $\mathbf P_k$ relate to the topological transitivity condition from Hofbauer’s paper?

- Does having Property $\mathbf P_k$ for some value of $k$ tell us anything about having it for other values? (If so, one might hope to be able to remove the condition on $k$ in Theorem 6.)

- Is there a more general version of Property $\mathbf P_k$ that we can use in Proposition 5 and Theorem 6?

I’ll address these in the next post. For now I will conclude this post by emphasizing the idea in the proof of (11): “if we go $k$ iterates in each step, then since each step only has two ways to be bad, we can bound the entropy of always-bad things by $\frac{\log 2}{k}$”. This same idea plays a key role in the proof of the growth lemma for dispersing billiards, although in that setting one needs to replace “two ways” with “$Kk$ ways” and the bound becomes $\frac{\log(Kk)}{k}$. See Chapter 5 of the book “Chaotic Billiards” by Chernov and Markarian, or Section 5 of “On the measure of maximal entropy for finite horizon Sinai Billiard maps” by Baladi and Demers (JAMS, 2020); in particular, compare equation (5.4) there with (11) above. The fact that taking $k$ large makes this estimate smaller than the entropy is an example of the phenomenon “growth beats fragmentation”, which is important in studying systems with singularities.